About our work

All cases are anonymized, giving you an unfiltered look behind the scenes.

We work on a project basis with full-stack data teams, meaning no secondment. Our clients dictate the business objectives and priorities; we dictate how to get there, how to build, and which experts are required to solve the challenge. Client teams vary depending on the challenges ahead, and they can draw on skills from other teams when required. This gives our clients the best people when they need them, and gives our people the opportunity to work on multiple projects with many different experts. Our delivery framework is based on Agile, with a hybrid of Scrum and waterfall program management: a way of working that continuously evolves and is optimized to deliver maximum value with data.

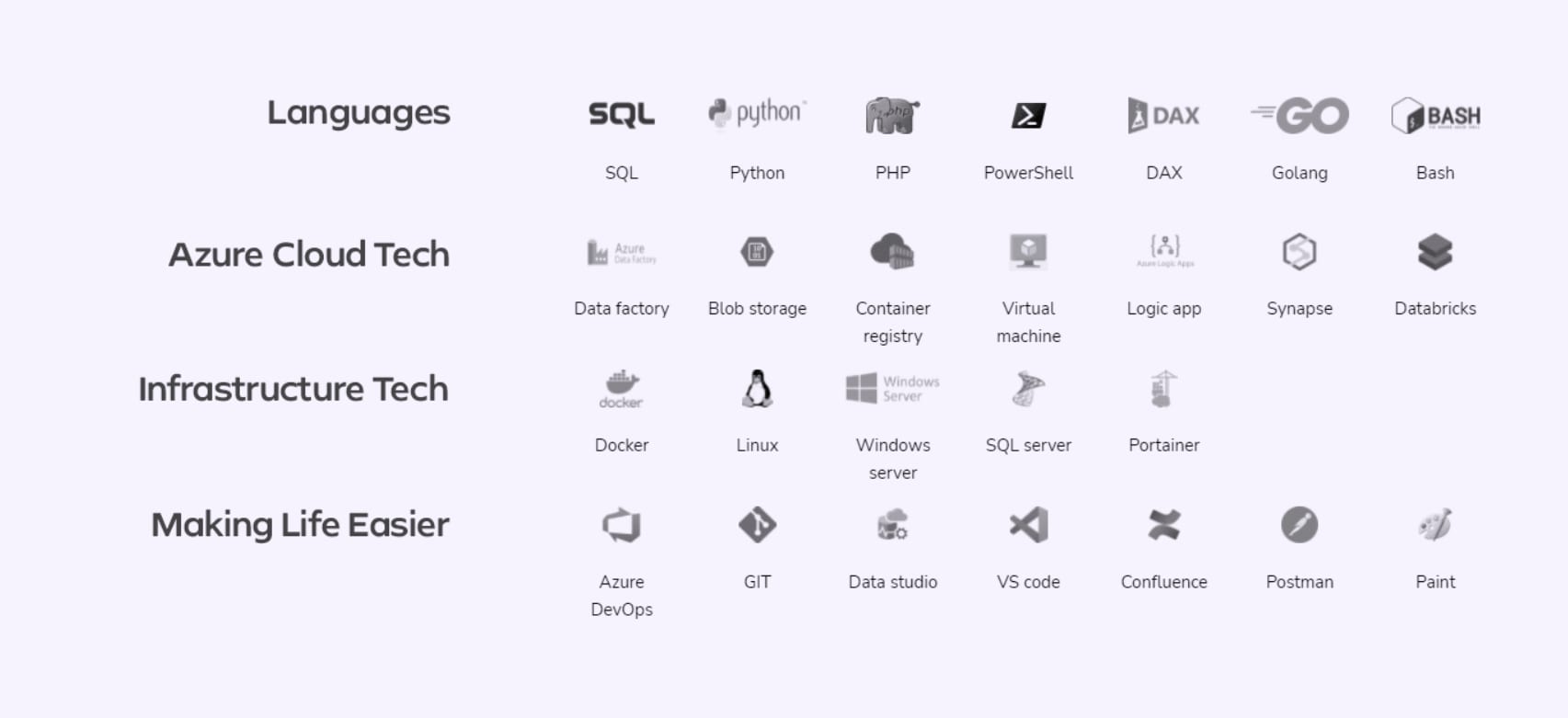

We're pragmatists. That means we always work with the best tools for the job, which at the moment is the MS Azure stack. But... we're not married to Bill. We continuously try new technologies and explore new ways to do our job better.

The customer wants to optimize its global logistics chain and develop new products based on available data. They asked us to set up the global data infrastructure, implement the BI solution across all locations, guide the organization towards a data-driven culture, and identify optimization opportunities using ML and AI.

ADF, Azure Machine Learning, Azure SQL Database, Azure SQL Server, Data Lake, Dremio, Git, Jupyter notebooks, MS self-hosted integration runtime, Power BI

- Extending the standardized BI platform with the Finance and Asset Management domains (data flow, data model, reports).

- Expanding the data science team and applications.

- Rolling out the standardized BI platform across 5 new locations.

- Handling all exceptions from the new locations in the standardized BI platform.

- Implementing Master Data Management at head office level.

- Setting up and expanding a Business Intelligence Competence Center.

- We secured the sponsorship of the head office's C-suite for our vision of the global BI architecture;

- We built one standardized BI platform for all locations with built-in flexibility for local extensions;

- We developed one data model for all locations;

- We developed a central BI platform where the data from all locations comes together;

- We developed models that predict the productivity of key business processes at the sites. This allows us to optimize the logistics chain globally instead of locally.

The client is a consultancy that advises real estate companies on investment opportunities and the performance of their real estate portfolios. They expressed the ambition to become the market leader by offering the best service based on all available data. They want to take the analytics they provide to their customers more seriously, so that it delivers more insight and takes less time. In addition, they want to make benchmarking analyses possible and keep innovating with new products.

Excel, SQL database, Logic Apps, Azure Data Factory, Power BI

- Enriching the benchmark reporting with data from external sources such as CBS and well-known real estate systems.

- Expanding the benchmark report with reference points for key real estate business drivers (rental price per m², vacancy percentage and number of lettable units per object), so that proactive advice can be provided.

- Automating all remaining manual steps, such as enriching data from external sources.

- Expanding the data warehouse with data from as many sources as possible, so that all necessary data is always available and they stay commercially ahead with the most valuable analyses.

- All data dumps supplied by external parties are now translated into a generic data model, which makes comparisons possible.

- Data from complex, messy Excel files with unusual dependencies is now written to a database cleanly and automatically, with a notification system that flags any import that is rejected.

- We have developed a benchmark report for real estate clients, whereby a real estate party can select an object (or complete portfolio) from its own portfolio to compare it with data from all other real estate parties.

The customer's only BI employee left the company. At the same time, the organization's demand for insight was growing: more than one person could handle. They were urgently looking for a BI partner who could professionalize, optimize and maintain the current infrastructure, and then expand it with more reports and data points.

SQL Server Management Studio, Azure Data Studio, Azure DevOps, Git, Power BI Desktop

- Keeping the BI solution operational;

- Documenting the entire BI environment to remove dependency;

- Gathering feedback from the organization to develop the roadmap for the next phase.

- The first phase of our project, which mainly involved automating reports, has had a very positive impact on the business. The customer is very enthusiastic, keeps expanding the project with new questions and has even introduced us to new customers;

- A test environment has been set up for both the data warehouse and Power BI Service;

- Excel sources replaced by the data warehouse for the most important reports;

- Master Data Management model implemented for all different subsidiaries.

The client is a Dutch company that offers residential properties to private individuals. They expressed the ambition to make the organization data-driven step by step. The existing BI team was looking for a specialized BI partner that could provide additional capacity for developing the data lake, data model and Power BI reports. During the project, the assignment was expanded to include guiding the organization towards a Scrum way of working.

Azure Data Factory, Databricks, Power BI

- Setting up reporting for the portfolio management department.

- Creating insight for key strategic pillars in the field of sustainability and energy.

- Scrum methodology introduced and refined for the entire data team;

- Existing Excel reports phased out and moved to Power BI via a data lake;

- Reports, including the entire underlying data flow, set up for the most important product groups of the purchasing organization;

- All data from external parties now conforms to the client's own standard.

A software company was taken over by a major competitor. The new owner's management wants insight into the most important business drivers, such as new business, churn and ARR. We joined as the BI team to deliver this project.

Local SQL Server (on-premises), Power BI

- Building reports on Churn and new customers;

- Validation of numbers and calculation methods.

- All customer contract data has been made accessible and transparent;

- Existing data flow is automated;

- The calculations in the reports have been simplified and moved into a data warehouse;

- We built our own system that populates a historical model from the changes (mutations) between successive supplied snapshots;

- We developed one reporting table with contract data, built from two different source systems whose data does not match.
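To make the snapshot-based history idea more concrete, here is a minimal sketch of one common way to do it; it is not the system built for this client. It assumes each snapshot is a dictionary mapping a contract ID to a tuple of attribute values, and that the history is kept as rows with validity intervals (a Type 2 slowly changing dimension style). The names `Snapshot`, `HistoryRow`, `diff_snapshots` and `build_history` are hypothetical and chosen for illustration only.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical snapshot shape: contract ID -> attribute values on the snapshot date.
Snapshot = Dict[str, Tuple]


@dataclass
class HistoryRow:
    contract_id: str
    values: Tuple
    valid_from: date
    valid_to: Optional[date]  # None means the version is still current


def diff_snapshots(old: Snapshot, new: Snapshot) -> Tuple[Set[str], Set[str], Set[str]]:
    """Classify the mutations between two consecutive snapshots."""
    inserted = set(new.keys() - old.keys())
    deleted = set(old.keys() - new.keys())
    updated = {k for k in old.keys() & new.keys() if old[k] != new[k]}
    return inserted, updated, deleted


def build_history(snapshots: List[Tuple[date, Snapshot]]) -> List[HistoryRow]:
    """Replay snapshots in date order, closing and opening versions on each mutation."""
    history: List[HistoryRow] = []
    open_rows: Dict[str, HistoryRow] = {}  # current version per contract
    previous: Snapshot = {}
    for snap_date, current in sorted(snapshots, key=lambda s: s[0]):
        inserted, updated, deleted = diff_snapshots(previous, current)
        # Close the open version of every contract that changed or disappeared.
        for cid in sorted(updated | deleted):
            open_rows.pop(cid).valid_to = snap_date
        # Open a new version for every new or changed contract.
        for cid in sorted(inserted | updated):
            row = HistoryRow(cid, current[cid], snap_date, None)
            history.append(row)
            open_rows[cid] = row
        previous = current
    return history
```

Only the differences between consecutive snapshots produce new rows, so unchanged contracts keep a single open version no matter how many snapshots are supplied.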

The customer wants an overview of the performance of all vehicles, trips and the goods being transported. With full insight into their own data and the ability to run analyses on it, they want to steer and optimize the core business based on data.

Azure Data Factory, SQL Server, Power BI, Python

- Taking the first steps with Machine Learning: predicting the outcomes of a trip

- Continuously expand data model with new data points

- Building reports on goods movements per vehicle, sales, maintenance and cost per trip.

- Developing Snapshot Analytics

- Data warehouse set up with a staging layer and a reporting layer, with Power BI connected to it;

- All reports automated with Power BI;

- Big time savings: building reports now takes hours instead of days.

- Trend analyses delivered across all dimensions (vehicles, trips, goods)